DeepSeek-V2

There is little widely available, publicly documented information about DeepSeek-V2. However, based on the naming convention and the advancements typically associated with version updates in AI models, we can speculate on what DeepSeek-V2 might entail.

Model Architecture

What DeepSeek-V2 Could Be

| Model | Context Length | Download |
|---|---|---|
| DeepSeek-V2 | 128k | 🤗 HuggingFace |
| DeepSeek-V2-Chat (RL) | 128k | 🤗 HuggingFace |

DeepSeek-V2 is likely an upgraded version of the original DeepSeek LLM (Large Language Model). It would build on its predecessor with improvements in performance, efficiency, and functionality. Here are some potential features and enhancements that DeepSeek-V2 might offer:

1. Enhanced Performance

- Improved Accuracy: DeepSeek-V2 could feature better contextual understanding and more accurate responses, thanks to advancements in training techniques and larger datasets.

- Faster Processing: Optimizations in the model architecture could lead to faster inference times, making it more suitable for real-time applications.


2. Expanded Multilingual Support

- More Languages: DeepSeek-V2 might support a broader range of languages, making it more versatile for global applications.

- Better Language Understanding: Enhanced capabilities in understanding nuances, idioms, and cultural context across different languages.


3. Greater Customizability

- Fine-Tuning Options: DeepSeek-V2 could offer more robust fine-tuning capabilities, allowing businesses to tailor the model to their specific needs.

- Domain-Specific Adaptations: Improved support for specialized domains like healthcare, finance, legal, and education.

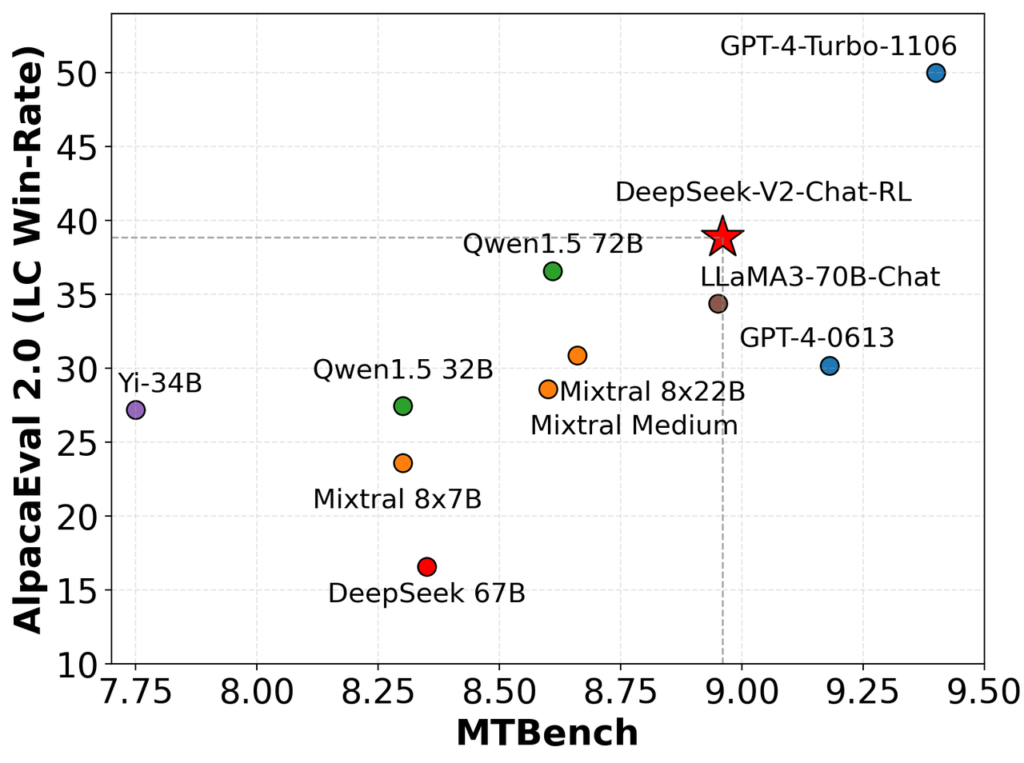

English Open Ended Generation Evaluation

4. Ethical AI and Bias Mitigation

- Advanced Bias Detection: DeepSeek-V2 might include more sophisticated mechanisms for detecting and mitigating biases in its outputs.

- Transparency and Explainability: Enhanced features for explaining how the model generates responses, ensuring greater transparency.

Standard Benchmark

| Benchmark | Domain | Qwen1.5 72B Chat | Mixtral 8x22B | LLaMA3 70B Instruct | DeepSeek-V1 Chat (SFT) | DeepSeek-V2 Chat (SFT) | DeepSeek-V2 Chat (RL) |
|---|---|---|---|---|---|---|---|
| MMLU | English | 76.2 | 77.8 | 80.3 | 71.1 | 78.4 | 77.8 |
| BBH | English | 65.9 | 78.4 | 80.1 | 71.7 | 81.3 | 79.7 |
| C-Eval | Chinese | 82.2 | 60.0 | 67.9 | 65.2 | 80.9 | 78.0 |
| CMMLU | Chinese | 82.9 | 61.0 | 70.7 | 67.8 | 82.4 | 81.6 |
| HumanEval | Code | 68.9 | 75.0 | 76.2 | 73.8 | 76.8 | 81.1 |
| MBPP | Code | 52.2 | 64.4 | 69.8 | 61.4 | 70.4 | 72.0 |
| LiveCodeBench (0901-0401) | Code | 18.8 | 25.0 | 30.5 | 18.3 | 28.7 | 32.5 |
| GSM8K | Math | 81.9 | 87.9 | 93.2 | 84.1 | 90.8 | 92.2 |
| Math | Math | 40.6 | 49.8 | 48.5 | 32.6 | 52.7 | 53.9 |
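To make the comparison in the Standard Benchmark table easier to read, the following minimal sketch computes the per-benchmark point difference between the DeepSeek-V1 Chat (SFT) column and the DeepSeek-V2 Chat (RL) column. The scores are copied from the table; the names `scores` and `gains` are illustrative and not part of any DeepSeek tooling.

```python
# Scores copied from the Standard Benchmark table above.
# Tuple order: (DeepSeek-V1 Chat SFT, DeepSeek-V2 Chat RL).
scores = {
    "MMLU": (71.1, 77.8),
    "BBH": (71.7, 79.7),
    "C-Eval": (65.2, 78.0),
    "CMMLU": (67.8, 81.6),
    "HumanEval": (73.8, 81.1),
    "MBPP": (61.4, 72.0),
    "LiveCodeBench (0901-0401)": (18.3, 32.5),
    "GSM8K": (84.1, 92.2),
    "Math": (32.6, 53.9),
}


def gains(table):
    """Return V2-minus-V1 point differences, rounded to one decimal place."""
    return {name: round(v2 - v1, 1) for name, (v1, v2) in table.items()}


deltas = gains(scores)

# Print benchmarks from largest to smallest gain.
for name, delta in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<28}{delta:+.1f}")
```

With these numbers, the largest gap is on Math (21.3 points) and the smallest is on MMLU (6.7 points); every benchmark in the table shows a positive difference.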

5. Integration with DeepSeek Framework

- Optimized Resource Usage: DeepSeek-V2 could be further optimized for efficiency, reducing computational costs and energy consumption.

- Scalability: Improved scalability for handling large-scale deployments across industries.

6. New Features and Capabilities

- Multimodal Support: DeepSeek-V2 might extend beyond text to support multimodal inputs (e.g., text, images, and audio).

- Interactive Learning: The model could incorporate interactive learning capabilities, allowing it to improve based on user feedback in real time.

7. Real-World Applications

DeepSeek-V2 would likely be designed to address more complex and diverse use cases, such as:

- Advanced Customer Support: More sophisticated chatbots capable of handling nuanced customer queries.

- Creative Content Generation: Enhanced capabilities for generating creative content like stories, scripts, and marketing materials.

- Scientific Research: Assisting researchers with data analysis, hypothesis generation, and report writing.

How DeepSeek-V2 Could Compare to Its Predecessor

| Feature | DeepSeek LLM | DeepSeek-V2 (Speculated) |
|---|---|---|
| Accuracy | High | Higher |
| Multilingual Support | Multiple languages | Expanded language support |
| Customizability | Fine-tuning for specific domains | Enhanced fine-tuning and adaptability |
| Ethical AI | Bias mitigation and transparency | Advanced bias detection and explainability |
| Efficiency | Optimized for performance | Further optimizations for cost and speed |
| Scalability | Suitable for large-scale deployments | Improved scalability |
| New Features | Text-based | Multimodal and interactive capabilities |

Chinese Open Ended Generation Evaluation

AlignBench (https://arxiv.org/abs/2311.18743)

| Model | Open/Closed Source | Overall Score | Chinese Reasoning | Chinese Language |
|---|---|---|---|---|
| gpt-4-1106-preview | Closed | 8.01 | 7.73 | 8.29 |
| DeepSeek-V2 Chat (RL) | Open | 7.91 | 7.45 | 8.35 |
| erniebot-4.0-202404 (文心一言) | Closed | 7.89 | 7.61 | 8.17 |
| DeepSeek-V2 Chat (SFT) | Open | 7.74 | 7.30 | 8.17 |
| gpt-4-0613 | Closed | 7.53 | 7.47 | 7.59 |
| erniebot-4.0-202312 (文心一言) | Closed | 7.36 | 6.84 | 7.88 |
| moonshot-v1-32k-202404 (月之暗面) | Closed | 7.22 | 6.42 | 8.02 |
| Qwen1.5-72B-Chat (通义千问) | Open | 7.19 | 6.45 | 7.93 |
| DeepSeek-67B-Chat | Open | 6.43 | 5.75 | 7.11 |
| Yi-34B-Chat (零一万物) | Open | 6.12 | 4.86 | 7.38 |
| gpt-3.5-turbo-0613 | Closed | 6.08 | 5.35 | 6.71 |

Contact:

If you have any questions, feel free to open an issue or reach out to us at service@deepseek.com.

Final Thoughts

While specific details about DeepSeek-V2 are not publicly available as of October 2023, it is reasonable to assume that it would represent a significant upgrade over the original DeepSeek LLM. With advancements in accuracy, efficiency, and functionality, DeepSeek-V2 could further solidify DeepSeek’s position as a leader in AI-powered language models.

For official information about DeepSeek-V2, check DeepSeek’s official website, press releases, or research publications for updates.